Transforming Variables

Transforming variables in regression is often a necessity. Both independent and dependent variables may need to be transformed (for various reasons).

Transforming the Dependent variable:

Homoscedasticity (constant variance) of the residuals is an important assumption of linear regression modeling. One way of moving toward it is to transform the target variable. A skewed or extremely non-normal target often causes problems here, so transforming the target is an important part of model building.
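As a minimal sketch of transforming the target, the snippet below fits a linear model on the log of a skewed target and converts predictions back to the original scale. The synthetic data, the choice of a log transform, and the use of sklearn's TransformedTargetRegressor are my own assumptions for illustration; they are not part of the Boston workflow in this post.

```python
import numpy as np
from sklearn.compose import TransformedTargetRegressor
from sklearn.linear_model import LinearRegression

# Synthetic data (assumption): the target is log-linear in x, so it is
# right-skewed and its spread grows with x on the original scale.
rng = np.random.default_rng(0)
x = rng.uniform(0, 4, size=(200, 1))
y = np.exp(1.0 + 0.5 * x[:, 0] + rng.normal(scale=0.3, size=200))

# Fit on log(y); predictions are mapped back with exp automatically.
model = TransformedTargetRegressor(
    regressor=LinearRegression(),
    func=np.log,
    inverse_func=np.exp,
)
model.fit(x, y)

pred = model.predict(x)            # predictions on the original scale
slope = model.regressor_.coef_[0]  # slope estimated on the log scale
print(f"slope on the log scale: {slope:.2f}")
```

The wrapper keeps the transformation and its inverse together with the model, so predictions come back in the units of the original target.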

Independent variables:

While independent variables need not be normally distributed, it is extremely important that there is a linear relationship between each regressor and the target (or, in logistic regression, its logit). Transformation is a way to fix the non-linearity problem, if it exists. Transformations can also help with high-leverage values or outliers.

Note that histograms are not the best way to diagnose non-linearity issues, though they are useful for visualizing distributions. Scatterplots are a far easier way to assess linearity in the data. The bottom line is that transformation may help with linearity, which will in turn help to achieve a better model fit.
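A scatterplot can be backed up with a quick numeric check. The sketch below uses synthetic data (an assumption, not the Boston data) in which the target depends on the logarithm of a feature, and compares the Pearson correlation before and after log-transforming that feature.

```python
import numpy as np

# Synthetic data (assumption): y is linear in log(x), not in x itself.
rng = np.random.default_rng(0)
x = rng.uniform(1, 100, size=300)
y = 2.0 * np.log(x) + rng.normal(scale=0.2, size=300)

# Pearson correlation measures the strength of the *linear* relationship.
r_raw = np.corrcoef(x, y)[0, 1]
r_log = np.corrcoef(np.log(x), y)[0, 1]

print(f"raw x:  r = {r_raw:.3f}")
print(f"log(x): r = {r_log:.3f}")
```

A higher correlation after the transformation suggests the relationship has become closer to linear, although the scatterplot remains the more informative diagnostic.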

From a model-building perspective, transformations can also help reduce the complexity of a model. Reducing polynomial terms or removing the need for interactions may also lead to a better fit.

Luckily for us, sklearn provides several power transformation methods. Two of the most frequently used are the Box-Cox and Yeo-Johnson transformation procedures. Both belong to the family of power transformations and are used to make distributions more normally distributed. Note that the Box-Cox method requires strictly positive inputs, while Yeo-Johnson works with positive, zero, or negative values.
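To make the difference between the two methods concrete, here is a minimal pure-Python sketch of both formulas for a single value and a given power parameter. The function names box_cox and yeo_johnson are hypothetical helpers written for this illustration; sklearn's PowerTransformer additionally estimates the power parameter for each feature by maximum likelihood, which this sketch does not do.

```python
import math

def box_cox(x, lam):
    """Box-Cox transform of a single value; defined only for x > 0."""
    if x <= 0:
        raise ValueError("Box-Cox is only defined for strictly positive x")
    if lam == 0:
        return math.log(x)
    return (x ** lam - 1.0) / lam

def yeo_johnson(x, lam):
    """Yeo-Johnson transform of a single value; defined for any real x."""
    if x >= 0:
        if lam == 0:
            return math.log1p(x)
        return ((x + 1.0) ** lam - 1.0) / lam
    # Negative values use the mirrored power 2 - lam.
    if lam == 2:
        return -math.log1p(-x)
    return -(((-x + 1.0) ** (2.0 - lam)) - 1.0) / (2.0 - lam)

print(box_cox(4.0, 0.5))       # (sqrt(4) - 1) / 0.5
print(yeo_johnson(-3.0, 0.5))  # -((4 ** 1.5) - 1) / 1.5
```

The Box-Cox branch rejects zero and negative inputs, which is why the data has to be shifted before it can be used, while Yeo-Johnson handles the sign of the input inside the formula.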

In this section, I also want to demonstrate how to use the quantile transformer of sklearn. It maps each feature onto a uniform distribution by default, or onto a normal distribution when output_distribution='normal' is set. Its advantages include reducing the impact of outliers or influential observations. A major drawback of this transformation is its potential for non-linear distortion. Also, values that fall beyond the fitted range are mapped to the bounds of the output distribution.
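The behavior at the edges of the fitted range can be checked directly. The following sketch fits a QuantileTransformer on synthetic training data (an assumption, not the Boston data) and then transforms two new values that lie outside the range seen during fitting.

```python
import numpy as np
from sklearn.preprocessing import QuantileTransformer

# Synthetic, right-skewed training data (assumption).
rng = np.random.default_rng(0)
train = rng.exponential(scale=2.0, size=(200, 1))

# n_quantiles is set explicitly so that it does not exceed the sample size.
qt = QuantileTransformer(n_quantiles=100, output_distribution='uniform',
                         random_state=0)
train_t = qt.fit_transform(train)

# Two unseen values: one far below and one far above the fitted range.
new = np.array([[train.min() - 10.0], [train.max() + 10.0]])
clipped = qt.transform(new)
print(clipped.ravel())
```

With the uniform output used here, both unseen values end up at the ends of the [0, 1] interval, so any information about how far outside the range they were is lost.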

Working with the Boston Housing data, I ingested the data the same way as before:

    import pandas as pd
    # Note: load_boston was removed in scikit-learn 1.2,
    # so this cell needs an older version of the library.
    from sklearn.datasets import load_boston

    boston = load_boston()
    print(boston.data.shape)

    # Create data frame: target
    boston_target_df = pd.DataFrame(data=boston.target, columns=['MEDV'])
    boston_target_df.head()

    # Create data frame: features
    boston_features_df = pd.DataFrame(data=boston.data, columns=boston.feature_names)
    boston_features_df.head()

I also ingested a pickled dataset. This data was prepared in a previous session when I standardized data. Since the Box-Cox transformation does not accept negative values, I used the version that was rescaled with the MinMax scaler of sklearn, which results in non-negative values.

    ##### Pickle
    # Use the pickled standardized data for the Box-Cox transformation.
    # The data is already standardized, so we can add 1 to the values:
    # the Box-Cox transform needs positive values.
    import pickle
    boston_stdr_df = pd.read_pickle('features.pkl')  # read pickle
    boston_stdr_df.head()

Notice that the dataset still contains 0 values, which are also prohibited in Box-Cox transformation. In order to get around this problem, I added 1 to each value.

    # Add one to each value to get rid of 0 values
    numeric_cols = [col for col in boston_stdr_df if boston_stdr_df[col].dtype.kind != 'O']
    numeric_cols
    boston_stdr_df[numeric_cols] += 1
    boston_stdr_df.head()

Note that this code also added 1 to the binary variable CHAS, which was not standardized; CHAS is left out of the transformation that follows. We are now ready to apply the Box-Cox transformation to the selected variables.

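Again, when building a model, one would want to fit the transformer on the training data only and then apply the fitted transformer to the test data, rather than fitting it on the full dataset as I do here for demonstration!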
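A minimal sketch of that train/test workflow is shown below. The synthetic data, the split proportions, and the choice of Yeo-Johnson are assumptions made for illustration; the point is only that the power parameters and the standardization are learned from the training rows.

```python
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import PowerTransformer

# Synthetic, right-skewed features (assumption).
rng = np.random.default_rng(42)
X = rng.lognormal(mean=0.0, sigma=1.0, size=(300, 2))

X_train, X_test = train_test_split(X, test_size=0.25, random_state=0)

pt = PowerTransformer(method='yeo-johnson', standardize=True)
X_train_t = pt.fit_transform(X_train)  # lambdas and scaling learned here
X_test_t = pt.transform(X_test)        # reuse them; do not refit on test data

print(pt.lambdas_)  # one estimated power parameter per feature
```

Because the test rows never influence the fitted parameters, the evaluation on the test set is not contaminated by information from it.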

    import pandas as pd
    from sklearn.compose import ColumnTransformer
    from sklearn.preprocessing import PowerTransformer

    # Columns to transform (the binary CHAS is left out)
    bc_cols = ['CRIM', 'ZN', 'INDUS', 'NOX', 'RM', 'AGE', 'DIS',
               'RAD', 'TAX', 'PTRATIO', 'B', 'LSTAT']

    # Box-Cox Transform: one PowerTransformer per column
    column_trans = ColumnTransformer(
        [(f'{col}_bc', PowerTransformer(method='box-cox', standardize=True), [col])
         for col in bc_cols])

    transformed_boxcox = column_trans.fit_transform(boston_stdr_df)
    new_cols = [f'{col}_bc' for col in bc_cols]

    Boston_bc = pd.DataFrame(transformed_boxcox, columns=new_cols)
    Boston_bc.head()

When executing the Yeo-Johnson transformation, it is not necessary that the data contain only values greater than 0. As a result, I used the original dataset (boston_features_df).

    # Yeo-Johnson Transform
    # Turn on standardize
    # YJ takes negative values, so we can use the original features df
    yj_cols = ['CRIM', 'ZN', 'INDUS', 'NOX', 'RM', 'AGE', 'DIS',
               'RAD', 'TAX', 'PTRATIO', 'B', 'LSTAT']

    column_trans = ColumnTransformer(
        [(f'{col}_yj', PowerTransformer(method='yeo-johnson', standardize=True), [col])
         for col in yj_cols])

    transformed_yeojohnson = column_trans.fit_transform(boston_features_df)
    new_cols = [f'{col}_yj' for col in yj_cols]

    Boston_yj = pd.DataFrame(transformed_yeojohnson, columns=new_cols)
    Boston_yj.head()

Finally, I wanted to demonstrate the use of a transformation method that is different from the power transformation methods described above. The quantile transformation is non-parametric, and one should use it cautiously because of its impact on outliers or values in the tails of distributions. Nevertheless, I find this transformation very useful at times.

    import pandas as pd
    from sklearn.preprocessing import QuantileTransformer

    def unique_values(x):
        return x.unique().tolist()

    # Same 12 columns as before (the binary CHAS is left out), so that the
    # transformed array matches the 12 new column names further down.
    columns = ['CRIM', 'ZN', 'INDUS', 'NOX', 'RM', 'AGE', 'DIS',
               'RAD', 'TAX', 'PTRATIO', 'B', 'LSTAT']
    df = boston_features_df[columns]

    qt = QuantileTransformer()  # default output distribution is uniform
    q_features = qt.fit_transform(df)

    # Optional check: original and transformed columns side by side
    suffix = '__qt'
    qdf = df.join(pd.DataFrame(q_features, columns=columns), rsuffix=suffix)
    for col in columns:
        q_col = f'{col}{suffix}'
        #print({col: qdf[col].nunique(), q_col: qdf[q_col].nunique()})
        gdf = qdf.groupby(col)[q_col].agg([pd.Series.nunique, unique_values])
        #print(gdf.sort_values('nunique', ascending=False).head())

    # Build the data frame from the quantile-transformed values
    # (the original cell reused transformed_yeojohnson here by mistake)
    transformed_qt = q_features
    new_cols = [f'{col}_qt' for col in columns]
    Boston_qt = pd.DataFrame(transformed_qt, columns=new_cols)
    Boston_qt.head()

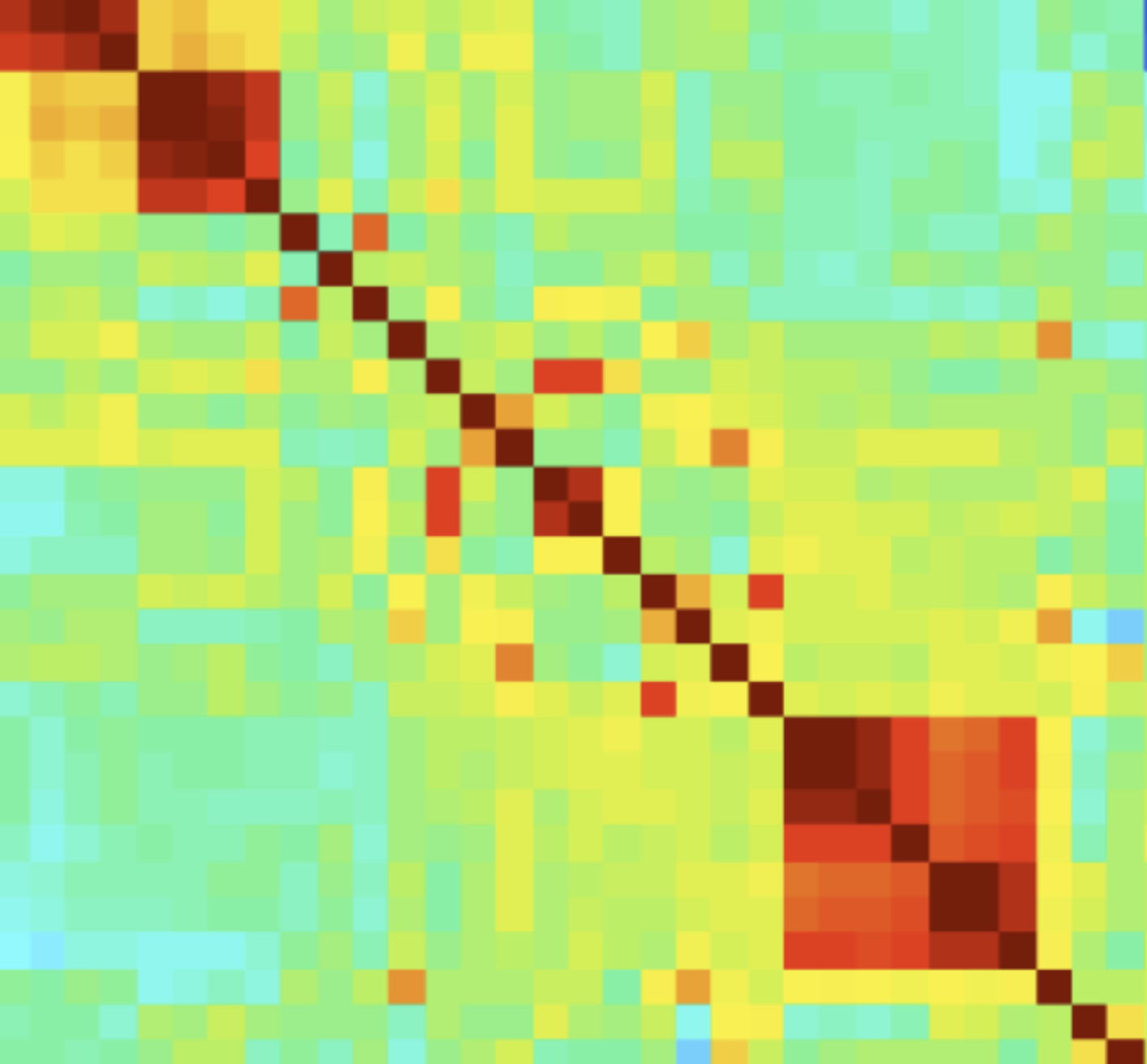

So let us compare how the three transformation methods changed the distribution of our data. In order to demonstrate the impact of transformation, I picked two variables, CRIM and NOX. The histograms and scatter plots of both variables transformed with the three different transformation methods were compared to the untransformed plots.

    # Plot to demonstrate the effect of transformation
    import matplotlib.pyplot as plt
    import matplotlib.gridspec as gridspec
    plt.style.use('ggplot')

    fig1 = plt.figure(constrained_layout=True, figsize=(6, 3))
    spec1 = gridspec.GridSpec(ncols=2, nrows=2, figure=fig1)

    f1_ax1 = fig1.add_subplot(spec1[0, 0])
    boston_features_df['CRIM'].plot(kind='hist', edgecolor='black', ax=f1_ax1)
    f1_ax1.set_title('CRIM', size=10)

    f1_ax2 = fig1.add_subplot(spec1[0, 1])
    Boston_bc['CRIM_bc'].plot(kind='hist', edgecolor='black', ax=f1_ax2)
    f1_ax2.set_title('CRIM Box-Cox Transformed', size=10)

    f1_ax3 = fig1.add_subplot(spec1[1, 0])
    Boston_yj['CRIM_yj'].plot(kind='hist', edgecolor='black', ax=f1_ax3)
    f1_ax3.set_title('CRIM Yeo-Johnson Transformed', size=10)

    f1_ax4 = fig1.add_subplot(spec1[1, 1])
    Boston_qt['CRIM_qt'].plot(kind='hist', edgecolor='black', ax=f1_ax4)
    f1_ax4.set_title('CRIM Quantile Transformed', size=10)

    # Scatter plots
    import matplotlib.gridspec as gridspec

    fig1 = plt.figure(constrained_layout=True)
    spec1 = gridspec.GridSpec(ncols=2, nrows=2, figure=fig1)

    f1_ax1 = fig1.add_subplot(spec1[0, 0])
    f1_ax1.scatter(boston_features_df['CRIM'], boston_target_df['MEDV'])
    f1_ax1.set_xlabel('CRIM Untransformed')
    f1_ax1.set_ylabel('MEDV')

    f1_ax2 = fig1.add_subplot(spec1[0, 1])
    f1_ax2.scatter(Boston_bc['CRIM_bc'], boston_target_df['MEDV'])
    f1_ax2.set_xlabel('CRIM Box-Cox Transformed')
    f1_ax2.set_ylabel('MEDV')

    f1_ax3 = fig1.add_subplot(spec1[1, 0])
    f1_ax3.scatter(Boston_yj['CRIM_yj'], boston_target_df['MEDV'])
    f1_ax3.set_xlabel('CRIM Yeo-Johnson Transformed')
    f1_ax3.set_ylabel('MEDV')

    f1_ax4 = fig1.add_subplot(spec1[1, 1])
    f1_ax4.scatter(Boston_qt['CRIM_qt'], boston_target_df['MEDV'])
    f1_ax4.set_xlabel('CRIM Quantile Transformed')
    f1_ax4.set_ylabel('MEDV')

    #### NOX histograms
    fig1 = plt.figure(constrained_layout=True, figsize=(6, 3))
    spec1 = gridspec.GridSpec(ncols=2, nrows=2, figure=fig1)

    f1_ax1 = fig1.add_subplot(spec1[0, 0])
    boston_features_df['NOX'].plot(kind='hist', edgecolor='black', ax=f1_ax1)
    f1_ax1.set_title('NOX', size=10)

    f1_ax2 = fig1.add_subplot(spec1[0, 1])
    Boston_bc['NOX_bc'].plot(kind='hist', edgecolor='black', ax=f1_ax2)
    f1_ax2.set_title('NOX Box-Cox Transformed', size=10)

    f1_ax3 = fig1.add_subplot(spec1[1, 0])
    Boston_yj['NOX_yj'].plot(kind='hist', edgecolor='black', ax=f1_ax3)
    f1_ax3.set_title('NOX Yeo-Johnson Transformed', size=10)

    f1_ax4 = fig1.add_subplot(spec1[1, 1])
    Boston_qt['NOX_qt'].plot(kind='hist', edgecolor='black', ax=f1_ax4)
    f1_ax4.set_title('NOX Quantile Transformed', size=10)

    # Scatter plots
    import matplotlib.gridspec as gridspec

    fig1 = plt.figure(constrained_layout=True)
    spec1 = gridspec.GridSpec(ncols=2, nrows=2, figure=fig1)

    f1_ax1 = fig1.add_subplot(spec1[0, 0])
    f1_ax1.scatter(boston_features_df['NOX'], boston_target_df['MEDV'])
    f1_ax1.set_xlabel('NOX Untransformed')
    f1_ax1.set_ylabel('MEDV')

    f1_ax2 = fig1.add_subplot(spec1[0, 1])
    f1_ax2.scatter(Boston_bc['NOX_bc'], boston_target_df['MEDV'])
    f1_ax2.set_xlabel('NOX Box-Cox Transformed')
    f1_ax2.set_ylabel('MEDV')

    f1_ax3 = fig1.add_subplot(spec1[1, 0])
    f1_ax3.scatter(Boston_yj['NOX_yj'], boston_target_df['MEDV'])
    f1_ax3.set_xlabel('NOX Yeo-Johnson Transformed')
    f1_ax3.set_ylabel('MEDV')

    f1_ax4 = fig1.add_subplot(spec1[1, 1])
    f1_ax4.scatter(Boston_qt['NOX_qt'], boston_target_df['MEDV'])
    f1_ax4.set_xlabel('NOX Quantile Transformed')
    f1_ax4.set_ylabel('MEDV')