An Introduction to Clustering Techniques

““If you take a galaxy and try to make it bigger, it becomes a cluster of galaxies, not a galaxy. If you try to make it smaller than that, it seems to blow itself apart.””

What is clustering?

Clustering is a technique designed to find subgroups in a larger set. When partitioning the data into subgroups – often called clusters – analysts intend to distribute data so that the cases within a group are very similar, while they are very different when compared to cases in other clusters. Clustering belongs to the realm of unsupervised problems because the intent is to find a structure in the data. In other words, clustering is usually completed to gain insight into data distribution. This is different from supervised problems that target to predict an outcome, e.g. regression. Since clustering is designed to create homogenous subgroups within a data set, it can be thought of as simplification/dimension reduction algorithm.

Types of Clustering:

A lot of clustering methods exist, and a plethora of options are available in sklearn.cluster. Each clustering algorithm offers a “class” and a “function”. The class method uses the fit method that provides labels for each observation in the labels_attribute. The function provides and array of labels instead.

| Classes | Description |

|---|---|

| cluster.AffinityPropagation | Perform Affinity Propagation Clustering of data. |

| cluster.AgglomerativeClustering | Agglomerative Clustering |

| cluster.Birch | Implements the Birch clustering algorithm. |

| cluster.DBSCAN | Perform DBSCAN clustering from vector array or distance matrix. |

| cluster.FeatureAgglomeration | Agglomerate features. |

| cluster.KMeans | K-Means clustering. |

| cluster.MiniBatchKMeans | Mini-Batch K-Means clustering. |

| cluster.MeanShift | Mean shift clustering using a flat kernel. |

| cluster.OPTICS | Estimate clustering structure from vector array. |

| cluster.SpectralClustering | Apply clustering to a projection of the normalized Laplacian. |

| cluster.SpectralBiclustering | Spectral biclustering. |

| cluster.SpectralCoclustering | Spectral Co-Clustering algorithm. |

| Functions | Description |

|---|---|

| cluster.affinity_propagation | Perform Affinity Propagation Clustering of data |

| cluster.cluster_optics_dbscan | Performs DBSCAN extraction for an arbitrary epsilon. |

| cluster.cluster_optics_xi | Automatically extract clusters according to the Xi-steep method. |

| cluster.compute_optics_graph | Computes the OPTICS reachability graph. |

| cluster.dbscan | Perform DBSCAN clustering from vector array or distance matrix. |

| cluster.estimate_bandwidth | Estimate the bandwidth to use with the mean-shift algorithm. |

| cluster.k_means | K-means clustering algorithm. |

| cluster.mean_shift | Perform mean shift clustering of data using a flat kernel. |

| cluster.spectral_clustering | Apply clustering to a projection of the normalized Laplacian. |

| cluster.ward_tree | Ward clustering based on a Feature matrix. |

Source: API Reference -sklearn.clustering

K-Means Clustering:

A simple yet effective method of creating non-overlapping clusters is K-Means Clustering, which starts out by specifying the number of desired clusters. The algorithm assigns each case to one of the clusters so that variation within each cluster is minimized. First, the central point of each cluster is computed, which is the mean of each observation within the cluster, and then the distances of cases are computed vs. the central point. Cases are assigned to clusters to minimize the sum of these distances.

Mean Shift Clustering (MSC):

A sliding window based algorithm, MSC is designed to discover dense areas of data points. It also uses a center point approach but updates cases for center points as a sliding window moves over them. As the window shifts, the central points are recalculated and cases are assigned to a cluster if appropriate, e.g. the density of the cluster increases. The window shifts until there is no more cases are available to increase the density of a cluster.

Density-Based Spatial Clustering of Applications with Noise (DBSCAN):

The approach is very similar to MSC but the algorithm starts with arbitrary starting points, and a neighborhood is defined around it. If a sufficient number of points in a neighborhood are discovered then clustering starts otherwise a case is considered noise. All points in a neighborhood get to be labeled as either part of a segment or noise. When all points are visited, the algorithm starts with a new neighborhood. DBSCAN can handle outliers with ease as a result, but performs poorer if density in a data set varies a lot.

Expectation – Maximization (EM) Clustering:

EM typically uses a model called Gaussian Mixture Model, which provides a major advantage over K-means because it assumes that the data is normally distributed, therefore the mean and the standard deviation are both considered when assigning cases to a cluster. (K-means only uses the mean). In EM-GMM, each distinct normal (Gaussian) distribution is assigned to a cluster. This is a probability based technique, which assumes that points closer to the mean have a higher probability to belonging to that cluster. A major advantage of EM-GMM over K-means is that the shape of clusters do not need to be circles, they can take ellipse shapes providing additional flexibility.

K-means is equivalent to the expectation-maximization algorithm with a small, all-equal, diagonal covariance matrix.

Agglomerative Hierarchical Clustering:

Hierarchical clustering can be either bottom-up or top-down. Bottom-up algorithms treat each case as a cluster and merge pairs of clusters until all clusters are merged. Merging is done based on distance of observations from each other and a criterion called linkage, which defines dissimilarity among sets. The hierarchical clusters are often depicted as a tree called a dendrogram. Data scientists often use the general linkage method to collapse clusters into one. There are distinct versions of linkage, some seek to maximize the dissimilarity amongst clusters, while others minimize dissimilarity within clusters. Other methods are also available.

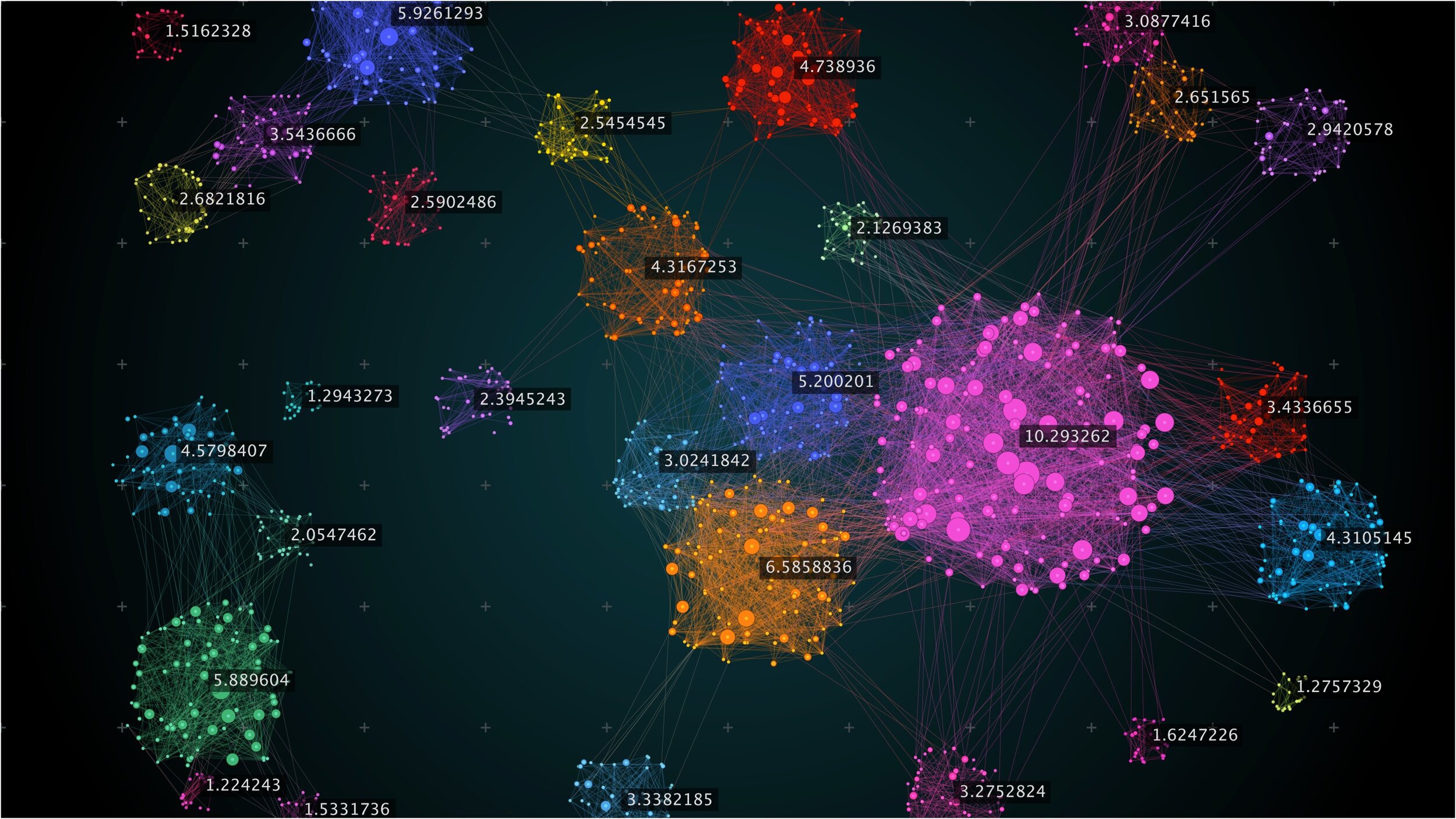

Clustering Based on Visualization:

Several other techniques exist that allow data scientists to reduce the dimensions of data (e.g. create subgroups within a data set). Some of these methods are based on visualization vs. distance or probability.

Self-Organizing Maps (SOM):

SOMs are also referred to as Kohonen Networks, named after their inventor, Teuvo Kohonen, according to Ahn and Syn. The technique visually reduces data to lower dimensions on a map thereby depicting similarities among observations. Weight vectors are created and each data points’ vector is compared to each weight vector. Vectors are selected randomly for representation and every vector is used to compute the weight that is most similar to a specific input vector. Winning nodes are referred to as Best Matching Unit (BMU). As nodes get closer to a BMU, their weights change and clusters are formed.

Link: http://www.pitt.edu/~is2470pb/Spring05/FinalProjects/Group1a/tutorial/som.html

Link: http://www.ai-junkie.com/ann/som/som1.html

Quality of Clustering and Machine Learning:

As discussed, clustering can take many forms. In each case the quality of the algorithm needs to be tested and machine learning may be used to compare the results and select the best algorithm.

Partitioning algorithm based models need to be compared with the help of a criterion. In case of hierarchical algorithms, the decomposition of the set of data can also be examined by a specified criterion. Density based models are density functions whose outputs can be evaluated and compared in machine learning and Model-based algorithms produce fit statistics that can be evaluated.

Link: http://www.stat.columbia.edu/~madigan/W2025/notes/clustering.pdf

As a result, machine learning is essential when using clustering methods to select the most stable and useful clusters possible. Machine learning can also be used to train and tune clustering algorithms. For example, it can be used to select dissimilarity measures and linkage functions to name a few.

Updated on May 1.